Performance Testing

How to approach performance testing in a meaningful way - 11 August 2018

Introduction

What is Performance Testing? This is a question that seems easy to answer, but in my experience, everybody gets it wrong.

This is quite a statement.

I will go into the details in a minute, and you can see whether you agree with me. But first, let me describe what I usually observe.

An organisation identifies that it needs to do performance testing. Someone creates a JIRA ticket describing the need. The QA developer starts work on the ticket. He creates a test script using JMeter or Gatling and then executes the test. Part of the script represents the user, and a loop around that part represents the load. The developer then asks: 'How much load should I apply?', and this is where things get very woolly. The business owners come back with a figure that they think represents the average use of the system, and the developer makes some translation of what that actually means for his tests. He then runs the tests, and the application falls apart.

The application developers then jump in and fix some of the issues. They soon hit the performance limits of the architecture they have chosen. They reach a point of seriously diminishing returns on their efforts, and then they start to question the validity of the test. They approach the QA developer, analyse the test, and declare that it is much too harsh. The Delivery Manager gets involved at this point. The deadline has passed and everyone wants to release the code into production. And then everyone realises that there is an awful lot of guesswork involved in performance testing.

The leadership team then declares that we are all reasonably confident that performance is good and that we can move on. We compromise on the original test, either because our application isn't up to the performance requirements or because, legitimately, the test actually is too harsh.

That has been my experience. And I've seen this more than once.

Can I argue that this has been a waste of time? No! We have, in the meantime, done some serious performance analysis on the application, and we are reasonably certain that the application will perform. But we have lost something along the way:

The original question was, first:

How many users can we currently support?

and, following on from that:

If our application can support that much load, how much headroom do we have?

What we need is a more rigorous approach: an actual methodology, where every step is carefully considered and systematic. With one, we can more readily communicate the process to business owners and more readily defend the analysis we arrive at.

So here are the steps to my Performance Testing Methodology:

- Create User Model

- Gather Statistics

- Establish Current Production Load

- Develop the Test Script

- Validate the Test Scripts and the User Model

- Identify the Failure Condition

- Test

- Conclusion

I should probably clarify, at this point, the context of the system we are trying to test. I'm talking about the application servers that implement the bulk of your business logic and that persist data. These are the servers that serve your application to your users and make your system available on the web. They are the constrained resource.

In particular, I'm not looking at the performance of client-side JavaScript applications or at the analysis of algorithms.

What is a User?

So what is a user? This usually isn't even the first question asked. The first question is: how many users do we currently have in production? Once the business owners come back with an answer, the developer then wonders what they meant by 'user'. Here are some possible answers to that question.

- A person who is registered on the system.

- A person who is logged into the system.

- A person who is currently clicking on something in the application.

These three answers illustrate the issue. A user in this context only matters if they are doing something. We are interested only in active users; inactive users have no impact on the system's performance.

So what is an active user? Again, this is hard to define. A logged-in user is a user who has an HTTP session available to him or her at a given point in time. But HTTP sessions typically time out after 30 minutes, and most people don't click logout when they leave a website; they just close the browser. So, depending on the average length of a user's session, you can work out the proportion of sessions that are probably inactive. At any rate, a simple count of the number of open sessions is grossly inadequate.
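Here is a rough sketch of how to work out that proportion. It rests on two assumptions: users never log out, so every session lingers for the full timeout after its last request, and new sessions start at a steady rate. A session then stays in the count of open sessions for its active minutes plus the timeout, and only the active share of that time represents a real user. The class and method names are hypothetical.

```java
public class ActiveSessionEstimate {

    // Rough estimate of how many open sessions belong to users who are still active.
    // Assumes users never click logout, so each session lives for its active period
    // plus the full idle timeout, and that sessions start at a steady rate.
    static double estimateActiveUsers(int openSessions, double avgActiveMinutes, double timeoutMinutes) {
        double activeFraction = avgActiveMinutes / (avgActiveMinutes + timeoutMinutes);
        return openSessions * activeFraction;
    }

    public static void main(String[] args) {
        // 4000 open sessions, users active for 10 minutes on average, a 30 minute timeout:
        // each session spends 10 of its 40 minutes active, so about a quarter are active.
        System.out.println(estimateActiveUsers(4000, 10, 30)); // prints 1000.0
    }
}
```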

Alternatively, you could analyse how often queries are made on your application. Is every query a user? Here we start to diverge seriously from the statements made by the business owners, and we start to add assumptions. There is a translation between what the business owners have stated and our interpretation of what that means in technical terms. We are getting closer to something workable, though.

If you carry on down this line, you will identify that a user will make certain calls during his use of the application. You can identify one particularly relevant call that he might make and declare that to represent your user. This might work to a greater or lesser degree in establishing how many users are currently active, but you still have the problem that you need to write a test script that represents a user.

You have arrived at the point where you need to do User Modeling.

The application developers need to describe the journeys a user makes in terms of the calls each user makes on the application server. With server-side rendering of web pages, this used to be a case of identifying page loads and form submissions. But these days a user may be using Single Page Application technology such as Angular or ReactJS. In that case, the calls will be a mix of web assets, REST calls and maybe even some socket.io.

So the application developers enumerate all the calls that can be made and a set of rules that describe which calls can follow which calls.

Let's look at an example. This is the beginning of a user model for a user of online banking:

LandingPage (get page) -> LoginPage (get page)

LandingPage (get page) -> RegisterPage (get page)

LoginPage (get page) -> Authenticate (submit credentials)

Authenticate (submit credentials) -> LoginPage (get page) //failed to login

Authenticate (submit credentials) -> PortfolioPage (get page) //a page that lists available accounts

RegisterPage (get page) -> Register (submit personal details)

As you can see, it's quite straightforward. In this case it's an old-style application: we have page loads, and details are submitted in form posts.
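One way to hold this user model in code is a map from each call to the calls that may follow it. That map is simply a state machine's transition table. The sketch below uses Java with hypothetical names:

```java
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

public class UserModel {

    // The calls in the online banking example above.
    enum Call { LANDING_PAGE, LOGIN_PAGE, REGISTER_PAGE, AUTHENTICATE, PORTFOLIO_PAGE, REGISTER }

    // Which calls may follow which: the rules of the user model.
    static final Map<Call, List<Call>> TRANSITIONS = new LinkedHashMap<>();

    static {
        TRANSITIONS.put(Call.LANDING_PAGE, List.of(Call.LOGIN_PAGE, Call.REGISTER_PAGE));
        TRANSITIONS.put(Call.LOGIN_PAGE, List.of(Call.AUTHENTICATE));
        // A failed login returns to the login page; a successful one shows the portfolio.
        TRANSITIONS.put(Call.AUTHENTICATE, List.of(Call.LOGIN_PAGE, Call.PORTFOLIO_PAGE));
        TRANSITIONS.put(Call.REGISTER_PAGE, List.of(Call.REGISTER));
    }

    static boolean canFollow(Call from, Call to) {
        return TRANSITIONS.getOrDefault(from, List.of()).contains(to);
    }

    public static void main(String[] args) {
        System.out.println(canFollow(Call.LANDING_PAGE, Call.LOGIN_PAGE));   // true
        System.out.println(canFollow(Call.LANDING_PAGE, Call.AUTHENTICATE)); // false
    }
}
```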

Bear in mind that at this point you may have different types of users. An example from my recent experience: one user logs in and uses the application in the normal way, while another clicks on a link in an email that deep-links into the application so that he can view only one page. If you don't identify the different modes of use up front, it will seriously throw off your analysis later.

Gathering Statistics

So now you have your user model. Next you need an idea of the actual levels of activity seen in production. There are a number of ways to get this. The first is browser instrumentation such as Google Analytics. This kind of instrumentation emits events to Google, which collates them for later analysis. This is great for business intelligence and marketing purposes but is of less use for ours, because you have to map what the website presents onto the calls it makes. This kind of instrumentation is concerned with page loads and button clicks, which can at times be hard to map to the actual calls made on the back-end servers.

A better source of statistics is something like ELK. ELK stands for Elasticsearch, Logstash and Kibana. This is a suite of applications that gathers logs and other metrics from your various applications. The actual collection of metrics is done by something like Filebeat or Metricbeat, which sends the data to Logstash. Logstash feeds the data into Elasticsearch, which acts as its database, and Kibana offers a graphing and querying interface on top of that. For our purposes we are much more interested in the REST calls made on your applications than in button clicks or page loads.

So it is a good idea to start by shipping your HTTP request logs into ELK. You then have a set of HTTP calls available, and you can start to compute the times between related HTTP calls and their variances.
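Here is a minimal sketch of that computation. It assumes each log line can be attributed to a user, for example through a session identifier, and that the lines are sorted by time. The `LogEntry` record and the method names are hypothetical and are not part of ELK:

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

public class CallIntervals {

    // One line of an HTTP request log, assumed to carry a user or session identifier.
    record LogEntry(String userId, String call, long timestampMillis) {}

    // Groups the time between consecutive calls by the same user under "from -> to".
    // Assumes the entries are already sorted by timestamp.
    static Map<String, List<Long>> intervalsByTransition(List<LogEntry> entries) {
        Map<String, LogEntry> previousByUser = new HashMap<>();
        Map<String, List<Long>> intervals = new HashMap<>();
        for (LogEntry entry : entries) {
            LogEntry previous = previousByUser.put(entry.userId(), entry);
            if (previous != null) {
                String transition = previous.call() + " -> " + entry.call();
                intervals.computeIfAbsent(transition, k -> new ArrayList<>())
                         .add(entry.timestampMillis() - previous.timestampMillis());
            }
        }
        return intervals;
    }

    public static void main(String[] args) {
        List<LogEntry> log = List.of(
                new LogEntry("alice", "GetPortfolioList", 0),
                new LogEntry("bob", "GetPortfolioList", 1_000),
                new LogEntry("alice", "GetCurrentAccountTransactions", 4_000),
                new LogEntry("bob", "GetMortgageAccountTransactions", 9_000));
        System.out.println(intervalsByTransition(log));
    }
}
```

In practice the entries would come from your exported request logs rather than a hard-coded list.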

So what kind of statistics are you interested in? You want to capture the stochastic behaviour of your users. You already know what actions they can take, and which actions follow on from which, depending on their state. What you don't know is how often users make one choice over another, or how long they take to make a choice.

You are interested in gathering these statistics (carrying on with our online banking example):

- The probability that the GetPortfolioList call will be followed by the GetCurrentAccountTransactions call, by the GetMortgageAccountTransactions call, or by no call at all. All the probabilities should total 1.

- The length of time it takes from the GetPortfolioList call to any following call such as GetCurrentAccountTransactions.

There are two distinctly different kinds of measure here. The first is a set of outcomes, each with a certain probability:

GetPortfolioList -> GetCurrentAccountTransactions = 0.54

GetPortfolioList -> GetMortgageAccountTransactions = 0.34

GetPortfolioList -> Nothing = 0.12

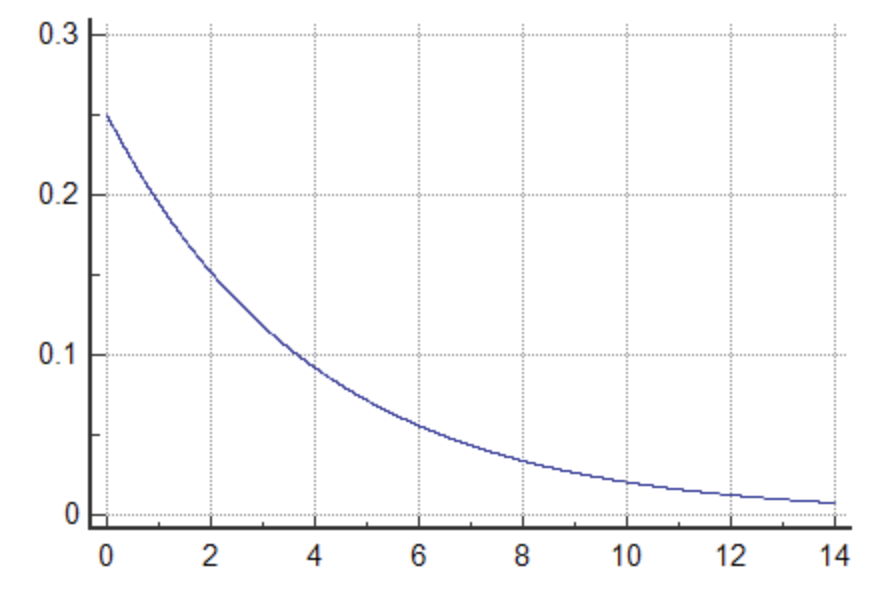
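Estimating these probabilities is just counting. Divide how often each follow-up occurred by the total number of times GetPortfolioList was seen. A test script can then use the same numbers by walking the cumulative probabilities with a uniform random number. The sketch below uses hypothetical names and made-up counts:

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.Random;

public class TransitionProbabilities {

    // Turns observed counts of each follow-up call into probabilities that total 1.
    static Map<String, Double> toProbabilities(Map<String, Integer> counts) {
        int total = counts.values().stream().mapToInt(Integer::intValue).sum();
        Map<String, Double> probabilities = new LinkedHashMap<>();
        counts.forEach((call, count) -> probabilities.put(call, count / (double) total));
        return probabilities;
    }

    // Picks the next call by walking the cumulative probabilities with a uniform number in [0, 1).
    static String pickNext(Map<String, Double> probabilities, double uniform) {
        double cumulative = 0;
        String last = null;
        for (Map.Entry<String, Double> entry : probabilities.entrySet()) {
            cumulative += entry.getValue();
            last = entry.getKey();
            if (uniform < cumulative) {
                return last;
            }
        }
        return last; // guards against rounding leaving the total fractionally below 1
    }

    public static void main(String[] args) {
        Map<String, Integer> counts = new LinkedHashMap<>();
        counts.put("GetCurrentAccountTransactions", 540);
        counts.put("GetMortgageAccountTransactions", 340);
        counts.put("Nothing", 120);
        Map<String, Double> probabilities = toProbabilities(counts);
        System.out.println(probabilities);
        System.out.println(pickNext(probabilities, new Random().nextDouble()));
    }
}
```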

The second kind of measure is a time interval, which can be viewed as the length of time between two events in a Poisson Process. The key property of a Poisson Process is that events occur independently of one another, like cars passing through an intersection or customers arriving at a checkout counter. This isn't always true in your user model, but we can make this assumption here, since we are not doing a statistical analysis but rather trying to model stochastic behaviour. So let's proceed. In this case, the time interval can be modelled using the Exponential Distribution.

It has a probability density function of

$$f(x) = \lambda e^{-\lambda x}, \quad x \ge 0$$

where $\lambda$ is the decay parameter. It is the inverse of the mean $\mu$, so $\lambda = 1/\mu$. So if, on average, one car passes an intersection every 4 minutes, then

$$\lambda = \frac{1}{4} = 0.25$$

The exponential distribution in this case is:

$$f(x) = 0.25\, e^{-0.25x}$$

The x axis represents the number of minutes. The y axis represents how likely it is that the intersection stays clear for exactly that long (strictly, this is a probability density). The more time that passes, the lower the probability that the intersection will still be clear.

So what we want to do is pull the time intervals between calls for each transition in our user model out of ELK, and then compute their average.
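Because the decay parameter is the inverse of the mean, fitting the Exponential Distribution to the intervals for one transition takes a single division. The sketch below uses made-up intervals, in minutes, and hypothetical names:

```java
public class ExponentialFit {

    // The decay parameter is the inverse of the mean interval: n / (sum of intervals).
    static double decayParameter(double[] intervals) {
        double sum = 0;
        for (double interval : intervals) {
            sum += interval;
        }
        return intervals.length / sum;
    }

    // Probability density f(x) = lambda * e^(-lambda * x) for x >= 0.
    static double density(double lambda, double x) {
        return x < 0 ? 0 : lambda * Math.exp(-lambda * x);
    }

    public static void main(String[] args) {
        double[] minutesBetweenCars = {2.0, 6.0, 3.0, 5.0}; // a mean of 4 minutes
        double lambda = decayParameter(minutesBetweenCars);
        System.out.println(lambda);                // 0.25
        System.out.println(density(lambda, 4.0));  // about 0.092
    }
}
```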

So, in summary, our user model has calls that load data and calls that perform actions, plus rules for which calls can follow which. The possible follow-up calls are all determined as part of user modelling. We need statistics to work out the probabilities where more than one call might follow, and to work out the average length of time that passes between calls.

What is the Production Load?

So at this point, you have instrumented your production system and you have a solid idea of the behaviour of a user in terms of calls made on your application servers. You should then be able to tell what your production load is.

Or can you? Is it as simple as working out some significant event you will use to identify an active user and then calculating the average over the day? Why pick a day? Why not a month? What happens to your user activity during the night? Or for that matter, what about lunch time?

Working out a daily average is only useful for billing purposes. From a performance standpoint, what you need to know is the heaviest level of user activity that you currently support. And 'heaviest' is a vague term.

Suppose you take your total daily use and divide it by the number of minutes in a day, including night-time. If your application is only really active for half the day, you might end up with half the figure you would otherwise get. Clearly, if you average over the whole day, you could be wildly off. You want to remove certain parts of the day from your calculations; which parts depends on your user activity levels.

Also, should you calculate the load per second, per minute or per hour? Which time interval is relevant? What we need is a meaningful way to approach these questions. The process should make sense and be easy to explain. A lot of this process will depend on judgement which is subjective, but having a rationale does mean that you end up with a meaningful answer rather than just a guess.

The first major task in this process is to identify a significant event. A user will make many different types of calls on your application, and you want to find the type of call that represents an active user. It is best if this call is easy to map to a user. This might be the login call. Alternatively, your application might poll the server for updates to the user's state, and the frequency of those polling calls might be very predictable. In that case you can gauge the number of active users with a high degree of accuracy.

The second problem is to decide on the time interval in which you will count the number of significant events. If the significant event is the login request, then your time interval will be the length of time your users have an active session. If you have some other, more accurate measure, you have more flexibility. Bear in mind, though, that the time interval that makes most sense will correspond to the time it takes your test script to execute through your user model. That way, the numbers you calculate for the load can be applied directly to your test scripts.

Once you have decided on your time interval, you can total the significant events for every time frame. For example, say your test script will execute for roughly 10 minutes, and that is also the average length of your users' sessions. Then you divide the day into 10 minute periods and total the number of significant events in each period. If you have one significant event per user, that is fine. But if you have a polling mechanism where you know each user polls the server 5 times a second, you divide each total by the number of calls you expect a user to make in a 10 minute period (5 × 60 × 10 = 3,000). You then have the number of users per 10 minute interval.
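The bucketing and the division by calls per user can be sketched as follows. Timestamps are assumed to be epoch milliseconds, and the names are hypothetical:

```java
import java.util.Map;
import java.util.TreeMap;

public class UsersPerPeriod {

    // Counts significant events in fixed periods, then converts each count into users
    // by dividing by the number of such events one user makes in a period.
    static Map<Long, Double> usersPerPeriod(long[] eventTimesMillis, long periodMillis, double eventsPerUserPerPeriod) {
        Map<Long, Long> counts = new TreeMap<>();
        for (long t : eventTimesMillis) {
            counts.merge(t / periodMillis, 1L, Long::sum); // key = index of the period
        }
        Map<Long, Double> users = new TreeMap<>();
        counts.forEach((period, count) -> users.put(period, count / eventsPerUserPerPeriod));
        return users;
    }

    public static void main(String[] args) {
        long period = 10 * 60 * 1000L;      // 10 minute periods
        double pollsPerUser = 5 * 60 * 10;  // 5 polls a second for 10 minutes = 3000
        long[] events = new long[9000];
        for (int i = 0; i < events.length; i++) {
            // 6000 polls in the first period, 3000 in the second
            events[i] = i < 6000 ? i * 100L : period + (i - 6000) * 200L;
        }
        System.out.println(usersPerPeriod(events, period, pollsPerUser)); // {0=2.0, 1=1.0}
    }
}
```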

You now have a set of totals, and you need to filter out the ones that are not representative. The threshold will depend heavily on the user activity levels you see for your application and will be highly subjective. For example, your user activity might number in the thousands during the day but drop to a hundred overnight. In that case, you might filter out any period with fewer than 750 users. On the other hand, you might have very low user activity even during the day. Then it is important that each period has at least 10 active users, though 30 is probably better; any period below this number is culled. You should hope to have at least 10 sample periods left at the end of the culling process, preferably 30.

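You would then compute the average of all your counts, using the classic arithmetic mean, where $x_i$ is the count for period $i$ and $n$ is the number of periods:

$$\mu = \frac{1}{n}\sum_{i=1}^{n} x_i$$

And you would then calculate the standard deviation of those counts:

$$\sigma = \sqrt{\frac{1}{n-1}\sum_{i=1}^{n} (x_i - \mu)^2}$$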

You should now have a mean and a standard deviation for the user activity within your time interval.
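The culling, mean and standard deviation can be sketched together. The threshold of 750 and the counts are made up to mirror the example above. The sketch uses the sample standard deviation, which divides by n - 1. Names are hypothetical.

```java
import java.util.Arrays;

public class PeriodStatistics {

    // Drops periods whose user count is below the chosen threshold (e.g. quiet night-time periods).
    static double[] cull(double[] counts, double threshold) {
        return Arrays.stream(counts).filter(c -> c >= threshold).toArray();
    }

    static double mean(double[] values) {
        return Arrays.stream(values).average().orElse(Double.NaN);
    }

    // Sample standard deviation (dividing by n - 1), treating the periods as a sample.
    static double standardDeviation(double[] values) {
        double average = mean(values);
        double sumOfSquares = Arrays.stream(values).map(v -> (v - average) * (v - average)).sum();
        return Math.sqrt(sumOfSquares / (values.length - 1));
    }

    public static void main(String[] args) {
        double[] usersPerPeriod = {1200, 1500, 90, 1300, 100, 1400};
        double[] daytime = cull(usersPerPeriod, 750);
        System.out.println(mean(daytime));              // 1350.0
        System.out.println(standardDeviation(daytime)); // about 129.1
    }
}
```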

What we need to do now is choose a probability distribution and work out the 99th percentile from it. The most appropriate distribution for this kind of count data is the Poisson Distribution. But when the counts are large (over 30), the industry standard is to use the Normal Distribution instead, so we will use the Normal Distribution. If your counts are below 30, I wouldn't worry about it: the Normal Distribution is close to the Poisson for any count above 10.

Why not just take the average? Because, for a symmetric distribution like the Normal, the load will exceed that figure half the time. That is clearly not appropriate.

So, how about taking 100% as our measure? That way we can be sure we handle the highest level of user activity. This is the same as picking the highest count from all our samples. We could do that, but the sample we end up taking might be an extreme event. Or it might not be that extreme, but by picking that number we have picked a statistic that carries no information about the larger group of samples. This is the advantage of using the distribution: any number derived from it models the whole sample population. And if we use the Normal Distribution, the 100th percentile is infinity anyway.

We are still left with a nagging doubt. It seems we picked 99% almost arbitrarily. Well, I did. You could equally pick 95%, or 99.9%. The problem with picking a low number is that a significant proportion of samples will fall above the threshold, as in the case of simply picking the average. Picking too high a number means that we stop describing the general character of the distribution and start catering to an extreme event. The number has to lie somewhere between these extremes, but you can't argue concretely for exactly where. So I take 99%.

Calculating the 99th percentile is a simple matter of obtaining the z-score for 99%. That value is 2.33 (2.326 to three decimal places). You can read it straight off the Normal Distribution z-score tables, which you can find online.

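The z-score formula is

$$z = \frac{x - \mu}{\sigma}$$

but in our case we need its inverse, using our calculated mean and standard deviation:

$$x = \mu + z\sigma = \mu + 2.33\sigma$$

This gives us the 99th percentile, which is your production load.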

For example, let's say the mean is 8 and the standard deviation is 4. Then, using the formula, our 99th percentile figure is 8 + 2.33 × 4 = 17.32.
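Here is the percentile calculation itself as a small sketch, using the z-score of 2.33 from the table. The names are hypothetical:

```java
public class ProductionLoad {

    // One-tailed z-score for the 99th percentile of the Normal Distribution, from a z-table.
    static final double Z_99 = 2.33;

    // Inverse of the z-score formula: x = mean + z * standardDeviation.
    static double productionLoad(double mean, double standardDeviation) {
        return mean + Z_99 * standardDeviation;
    }

    public static void main(String[] args) {
        System.out.println(productionLoad(8, 4)); // about 17.32
    }
}
```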

This number might seem intimidating at first. If our average is 8 and we somehow ended up at more than double that, surely something must be wrong? Well, keep in mind that we need our application to handle the production load at all times (here, 99% of the time), and that most of the time our application will be under-utilised. This is normal. I've also picked some numbers that look very volatile: a standard deviation of half the mean? And I've broken my rule about having a count of at least 10, but this was just for illustration.

Writing the Test Script

Okay, so we have a user model which we think is accurate. Let's create the test script.

How do you go about doing that?

This is where the available testing tools become inadequate, because they don't model stochastic processes very well. If I write my performance scripts in traditional tools, the script will look something like this:

```
for 1 to 1000 {
    runConcurrently {
        User user;
        openLandingPage(user);
        sleep(1000);
        loginSuccessfully(user);
        sleep(1000);
        number r = random();
        if (r < 0.4) {
            // 40% of users open their transactions
            openTransactionsPage(user);
            sleep(1000);
            if (random() < 0.1) {
                openPortfolioPage(user);
                sleep(1000);
            }
            ...
        } else if (r < 0.75) {
            // the next 35% (0.4 + 0.35) open their mortgage
            openMortgagePage(user);
            sleep(1000);
            if (random() < 0.1) {
                openPortfolioPage(user);
                sleep(1000);
            }
            ...
        } else {
            doNothing();
        }
        ...
    }
}
```

You can see some of the problems.

- All the wait periods are fixed. You could make them random, but the random numbers would follow a Uniform Distribution, which is inappropriate for waiting times.

- Subsequent steps are nested within the steps that precede them. The if-else statements that represent the choice between subsequent options force this on you.

- What you want to model is a state machine. This is imperative code, and it doesn't map very well onto a state machine. I will elaborate on this in a moment. Some testing tools even require you to express all of the above in XML. These limitations in expressiveness reduce the accuracy of your test scripts.

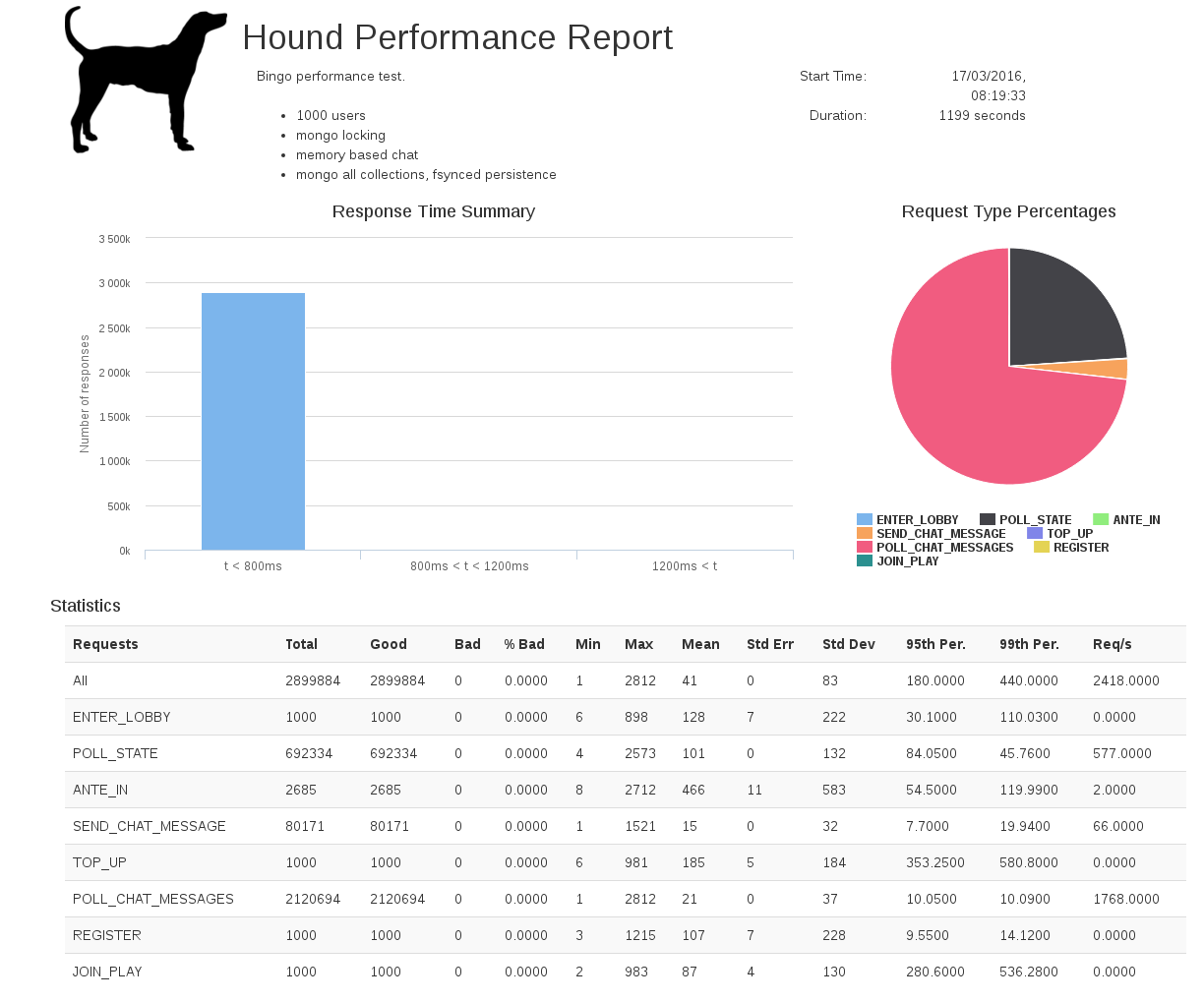

I wrote a performance testing tool called Hound to address these limitations. Hound requires you to model a state machine, which makes it easier to develop a test script that follows your user model. Also, I'm primarily a Java developer, and I hate coding test scripts in XML.

A few years ago I developed a sample bingo game in ReactJS with a Spring Boot back end and a MongoDB database. It wasn't a serious application; it was intended as a subject for developing Hound and as an exploration of ReactJS. What I will show you now is part of the test scripts for the bingo game. You can see the entire test script here.

This is how you create a test script.

```java
public enum BingoOperationType implements OperationType {
    REGISTER, LOGIN, TOP_UP, ENTER_LOBBY, JOIN_PLAY, POLL_STATE, ANTE_IN, POLL_CHAT_MESSAGES, SEND_CHAT_MESSAGE
}

public static void main(String[] args) throws IOException {
    Hound<BingoUser> hound = new Hound<BingoUser>()
            .shutdownTime(now().plusMinutes(20));

    // Samples are written to the "data" directory under reportsDirectory.
    hound.configureSampler(HybridSampler.class)
            .setSampleDirectory(new File(reportsDirectory, "data"));

    // The HTML report and the notes describing this run.
    hound.configureReporter(HtmlReporter.class)
            .setReportsDirectory(reportsDirectory)
            .setDescription("Bingo performance test.")
            .addBulletPoint("2000 users")
            .addBulletPoint("mongo locking")
            .addBulletPoint("memory based chat")
            .addBulletPoint("mongo all collections, fsynced persistence")
            .setExecuteTime(now());

    // The user model: the operations and the transitions between them.
    configureOperations(hound);

    // Start 2000 users, each with its own REST client, beginning with REGISTER.
    range(0, 2000).forEach(i -> {
        Client client = new ResteasyClientBuilder().connectionPoolSize(2).build();
        WebTarget target = client.target("http://localhost:8080");
        hound
                .createUser(new BingoUser(i))
                .registerSupplier(BingoServer.class, () -> new BingoServer(target))
                .start("user" + i, new Transition(BingoOperationType.REGISTER, now()));
    });

    hound.waitFor();
}
```

This initial code doesn't contain any details of the user model; those are all enclosed within the call to configureOperations(). What it does do is start 2000 users, each beginning with the REGISTER transition.

Here is an extract of configureOperations.

```java
private static void configureOperations(Hound<BingoUser> hound) {
    hound
            .register(BingoOperationType.REGISTER, BingoServer.class, (bingo, context) -> {
                bingo.post()
                        .path("register")
                        .requestBody(new RegisterRequest("user@me.com", "username" + context.getSession().getIndex(), "password" + context.getSession().getIndex(), "1234567812345678", "Visa", "08/19", "111"))
                        .expectedResponseCode(204)
                        .errorMessageOnFailure("registration request failed")
                        .execute();
                // Once registered, go straight to the lobby.
                context.schedule(new Transition(BingoOperationType.ENTER_LOBBY, now()));
            })
            .register(BingoOperationType.ENTER_LOBBY, BingoServer.class, (bingo, context) -> {
                List<PlayResponse> plays = bingo.get()
                        .path("lobby")
                        .expectedResponseCode(200)
                        .errorMessageOnFailure("enter lobby request failed")
                        .execute()
                        .readEntity(new ListPlayResponse());
                // Keep only games that start more than 35 seconds from now.
                List<PlayResponse> availableGames = plays
                        .stream()
                        .filter(play -> play.getStartTime().isAfter(now().plusSeconds(35)))
                        .collect(toList());
                if (availableGames.isEmpty()) {
                    // Nothing to join: enter the lobby again in 5 seconds.
                    context.schedule(new Transition(BingoOperationType.ENTER_LOBBY, now().plusSeconds(5)));
                    return;
                }
                // Pick a game at random and top up 3 seconds later.
                context.getSession().setPlay(availableGames.get(randomGenerator.nextInt(availableGames.size())));
                context.schedule(new Transition(BingoOperationType.TOP_UP, now().plusSeconds(3)));
            })
            ...
}
```

As you can see, we are registering two events, REGISTER and ENTER_LOBBY. Each event makes the call relevant to the desired operation and, once that is complete, sets up a following event. Once you have registered, you enter the lobby. Once you have entered the lobby, one of two things happens. If no games are available, you enter the lobby again after 5 seconds. Otherwise, you pick a game and top up your account with credit 3 seconds later. You get the idea: Hound requires you to specify events and rules for how events are linked together. It also takes a time parameter when scheduling a following event. This gives us a great deal of flexibility both in how we define our state machine and in how we determine the period of time between events.
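The state-machine pattern can be illustrated without relying on Hound's internals. The following is a minimal, hypothetical sketch of it: each operation's handler returns the next transition together with a time, and a priority queue orders the transitions on a simulated clock. None of these names are Hound's API, and nothing is sent to a server.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.EnumMap;
import java.util.List;
import java.util.Map;
import java.util.PriorityQueue;

public class StateMachineSketch {

    enum Op { REGISTER, ENTER_LOBBY, TOP_UP }

    // A scheduled transition: which user performs which operation at what simulated time.
    record Transition(int user, Op op, double atSeconds) {}

    // A handler does the work for one operation and returns the follow-up transition, or null to stop.
    interface Handler {
        Transition handle(Transition current);
    }

    // Runs transitions in time order and records what happened.
    static List<String> run(Map<Op, Handler> handlers, List<Transition> starts) {
        PriorityQueue<Transition> queue = new PriorityQueue<>(Comparator.comparingDouble(Transition::atSeconds));
        queue.addAll(starts);
        List<String> trace = new ArrayList<>();
        while (!queue.isEmpty()) {
            Transition current = queue.poll();
            trace.add(current.atSeconds() + "s user" + current.user() + " " + current.op());
            Transition next = handlers.get(current.op()).handle(current);
            if (next != null) {
                queue.add(next);
            }
        }
        return trace;
    }

    // Rules loosely mirroring the bingo script: register, enter the lobby, top up 3 seconds later.
    static Map<Op, Handler> lobbyHandlers() {
        Map<Op, Handler> handlers = new EnumMap<>(Op.class);
        handlers.put(Op.REGISTER, t -> new Transition(t.user(), Op.ENTER_LOBBY, t.atSeconds()));
        handlers.put(Op.ENTER_LOBBY, t -> new Transition(t.user(), Op.TOP_UP, t.atSeconds() + 3));
        handlers.put(Op.TOP_UP, t -> null);
        return handlers;
    }

    public static void main(String[] args) {
        run(lobbyHandlers(), List.of(new Transition(0, Op.REGISTER, 0), new Transition(1, Op.REGISTER, 1)))
                .forEach(System.out::println);
    }
}
```

Because each handler decides its own successor and delay, adding a branch or changing a wait touches one handler rather than a nested if-else block.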

At this point you are probably worried about losing the great reports your old performance testing tools give you for analysing your test results. Here is the screenshot of the Hound report for the test script above.

There are additional graphs which you can view here.

So let's get back to writing our test scripts. What the above test script doesn't do is model a stochastic process, by which I mean a process that has randomness in it. Does it matter? Yes, it matters.

Let's take an example. Say you have 2000 users who each use a connection once per second, and each holds the connection for 100 milliseconds. You need to configure a connection pool size.

You need a connection pool of 200 to satisfy this demand (2000 connections a second × 0.1 seconds each = 200 connections in use on average). So you might conclude that your system can now handle 2000 users, but it can't. Those users don't arrive at a uniform rate; they arrive haphazardly. There will be times when users arrive at the same moment and no connection is available for one of them, so that user waits for a connection to become free. This isn't much of a problem if the connection is the only resource the user is waiting for. The user just takes the next available slot, and another user then waits a little while until they are served in turn. But users don't only hold connections; they hold other resources too. And because a user had to wait to obtain the connection, he holds on to those other resources while he waits. This cascading effect can cause an escalation in waits across the whole system. It happens when you push your system near the edges of its capabilities.
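The effect can be seen with a rough sketch under a simplifying assumption: every request is served immediately, as if the pool were unlimited. The sketch only counts how often a request arrives while 200 or more connections are already in use. It uses the same average rate twice. The first run has perfectly even spacing, which is what a fixed-rate loop produces. The second has exponentially distributed gaps. Names and the seed are arbitrary, and time is kept in whole microseconds so the even case is exact.

```java
import java.util.PriorityQueue;
import java.util.Random;

public class PoolDemand {

    // Fraction of requests that arrive while poolSize or more connections are already in use.
    // Every request is served immediately (as if the pool were unlimited), so this measures demand only.
    static double fractionOverCapacity(long[] arrivalsMicros, long holdMicros, int poolSize) {
        PriorityQueue<Long> releaseTimes = new PriorityQueue<>();
        int overCapacity = 0;
        for (long arrival : arrivalsMicros) {
            while (!releaseTimes.isEmpty() && releaseTimes.peek() <= arrival) {
                releaseTimes.poll(); // connections already handed back
            }
            if (releaseTimes.size() >= poolSize) {
                overCapacity++;
            }
            releaseTimes.add(arrival + holdMicros);
        }
        return (double) overCapacity / arrivalsMicros.length;
    }

    // Requests spaced perfectly evenly, as a fixed-rate test loop would produce.
    static long[] evenArrivals(int count, long gapMicros) {
        long[] arrivals = new long[count];
        for (int i = 0; i < count; i++) {
            arrivals[i] = i * gapMicros;
        }
        return arrivals;
    }

    // Requests arriving haphazardly: exponential gaps with the same average spacing.
    static long[] randomArrivals(int count, long meanGapMicros, long seed) {
        Random random = new Random(seed);
        long[] arrivals = new long[count];
        double t = 0;
        for (int i = 0; i < count; i++) {
            t += -Math.log(1 - random.nextDouble()) * meanGapMicros;
            arrivals[i] = Math.round(t);
        }
        return arrivals;
    }

    public static void main(String[] args) {
        // 2000 requests a second (a 500 microsecond gap), each holding a connection for 100 ms.
        int count = 20_000; // about 10 seconds of traffic
        System.out.println(fractionOverCapacity(evenArrivals(count, 500), 100_000, 200)); // 0.0
        System.out.println(fractionOverCapacity(randomArrivals(count, 500, 42), 100_000, 200));
    }
}
```

With even spacing, demand never reaches 200 connections. With random arrivals at the same average rate, a large share of requests arrive when 200 or more are already in use. In a real pool those requests would wait.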

The other major problem with fixed numbers, as in the script above, is that a typical waiting time is most closely modelled by the Exponential Distribution. This distribution has a long tail. You might put in a wait period of 5 seconds, but some of your users will take a much shorter time to act, while others might take significantly longer. By arbitrarily specifying a fixed wait, you have reduced the quality of your model.

So how do you go about generating the waiting times for your test script?

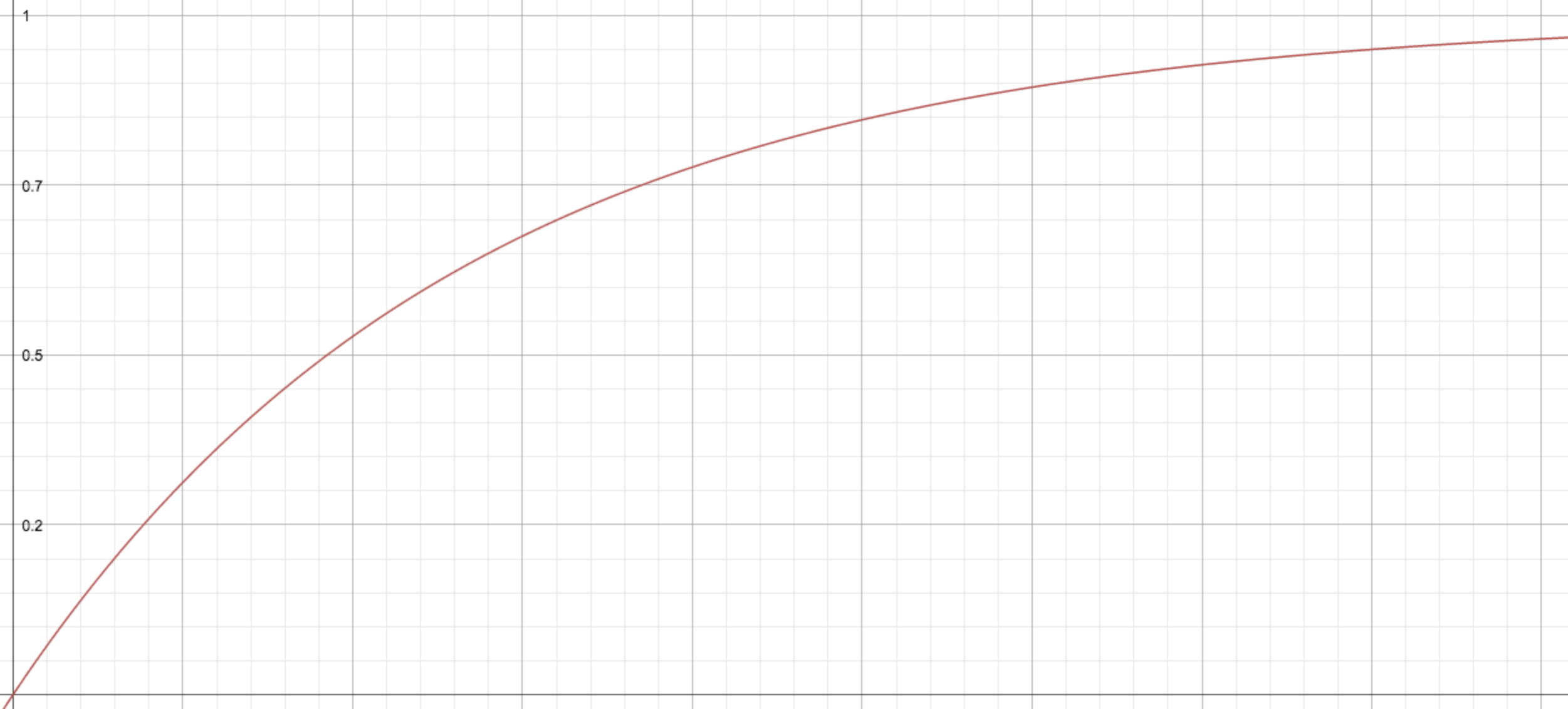

The formula to use is the Cumulative Distribution Function of the Exponential Distribution:

$$F(x) = 1 - e^{-\lambda x}, \quad x \ge 0$$

It gives the probability that the waiting time is $x$ or less. With the formula above you would ask: what is the probability of the intersection being clear for $x$ minutes? Now we ask: what is the probability of the intersection being clear of cars for $x$ minutes or less? (The complement, $1 - F(x) = e^{-\lambda x}$, gives us the probability of the intersection being clear for $x$ minutes or more, which is probably more interesting.)

So why are we interested in this function? It has a very useful property: it is invertible. If you start with a number of minutes, it gives you a probability; equally, if you start with a probability, it gives you exactly one number of minutes. Here is the graph.

So when generating your wait times, you use a random number generator to generate a probability $p$, where $0 \le p < 1$.

Then you work out your wait time using this formula, where $\lambda$ is the decay parameter again:

$$x = -\frac{\ln(1 - p)}{\lambda}$$

If the average number of cars crossing the intersection is 4 per minute, then your decay parameter is $\lambda = 4$.

Taking a probability $p$, you would then wait

$$x = -\frac{\ln(1 - p)}{4} \text{ minutes.}$$
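Below is the inversion behind that formula, followed by one illustrative value. The choice of p = 0.5 (the median wait) is mine, not a figure from the original example:

$$
\begin{aligned}
p &= 1 - e^{-\lambda x} \\
e^{-\lambda x} &= 1 - p \\
x &= -\frac{\ln(1 - p)}{\lambda} \\[4pt]
\lambda = 4,\; p = 0.5: \quad x &= -\frac{\ln 0.5}{4} = \frac{\ln 2}{4} \approx 0.173 \text{ minutes} \approx 10.4 \text{ seconds}
\end{aligned}
$$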

So when configuring your events in Hound you would write something similar to this:

```java
hound
        .register(BingoOperationType.REGISTER, BingoServer.class, (bingo, context) -> {
            try {
                bingo.post()
                        .path("register")
                        .requestBody(new RegisterRequest("user@me.com", "username" + context.getSession().getIndex(), "password" + context.getSession().getIndex(), "1234567812345678", "Visa", "08/19", "111"))
                        .expectedResponseCode(204)
                        .errorMessageOnFailure("registration request failed")
                        .execute();
            } finally {
                // Schedule the next transition even if the request failed,
                // after an exponentially distributed wait (a rate of 20 per second).
                context.schedule(new Transition(BingoOperationType.ENTER_LOBBY, calculateWait(random(), 20)));
            }
        })
```

As you can see, I've included a calculateWait() function that takes a random number and a rate parameter, 20 per second in this case. I've also wrapped the request in a try-finally block so that the next transition is scheduled even if the request fails. This is closer to production-ready code.
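The article doesn't show calculateWait(), so here is a minimal sketch of what it might look like. It is hypothetical. It assumes the scheduler accepts a `java.time.LocalDateTime`, as the `now().plusSeconds(...)` calls in the script suggest, and this may not match Hound's actual types.

```java
import java.time.LocalDateTime;

public class WaitCalculator {

    // Hypothetical helper: an exponentially distributed wait in seconds, from a uniform
    // random number p in [0, 1) and a rate in events per second (inverse of the CDF).
    static double waitSeconds(double p, double ratePerSecond) {
        return -Math.log(1 - p) / ratePerSecond;
    }

    // Hypothetical calculateWait: the point in time at which the next transition should fire.
    // Assumes the scheduler accepts a LocalDateTime.
    static LocalDateTime calculateWait(double p, double ratePerSecond) {
        long nanos = Math.round(waitSeconds(p, ratePerSecond) * 1_000_000_000L);
        return LocalDateTime.now().plusNanos(nanos);
    }

    public static void main(String[] args) {
        // With a rate of 20 per second, the average wait is 1/20 = 0.05 seconds.
        System.out.println(waitSeconds(Math.random(), 20));
        System.out.println(calculateWait(Math.random(), 20));
    }
}
```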

Checking the User Model

So you would write your test scripts to closely match your user model. You would then set about validating that your user model is correct.

To do this, run your test script at your average production load against your application in your test environment. You should plan to collect 10 to 30 sample values. These sample values are counts of a particular call type within a time interval. The time interval should match the one you used above to calculate the production load, and it will be closely associated with the time it takes your test scripts to run through a user's session. So if your time interval above was 10 minutes, you should run your test for 100 to 300 minutes (1 hour 40 minutes to 5 hours).

Now that you are running your application in your test environment, I should clarify something. There is a problem with any kind of performance testing: the scope of the application under test will always be a limited subset of your deployed estate. This is just reality. I've heard arguments along the lines of 'We should test as much as possible'. This is naive, as it doesn't recognise the cost of deploying everything. So inevitably you will have a boundary to your testing, and when your subject application needs to talk to something beyond that boundary, you will use a stub. A stub is typically a lightweight application on your network that has the same outward contract as the application or service it replaces. This presents a problem for performance testing, because stubs generally perform very well. They don't have to do the same work as the systems they replace, so running performance tests against them, or against a system that includes them, has an intrinsic inaccuracy.

Mitigating this problem, on the other hand, is quite easy. Downstream applications will have an expected SLA, so code your stubs to behave at that SLA level. When your system calls a stub, the stub should behave stochastically: its response times should have a mean and a variance in line with expected behaviour in production.
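The article doesn't prescribe a distribution for stub response times. This sketch assumes a log-normal distribution, which stays positive and is right-skewed, and parameterises it so that its mean and standard deviation match the SLA figures. The 200 ms and 50 ms values are made up, and the names are hypothetical.

```java
import java.util.Random;

public class StubLatency {

    private final Random random;
    private final double mu;    // mean of the underlying normal distribution
    private final double sigma; // standard deviation of the underlying normal distribution

    // Log-normal response times whose mean and standard deviation (in ms) match the given values.
    StubLatency(double meanMillis, double stdDevMillis, long seed) {
        this.random = new Random(seed);
        double variance = stdDevMillis * stdDevMillis;
        this.sigma = Math.sqrt(Math.log(1 + variance / (meanMillis * meanMillis)));
        this.mu = Math.log(meanMillis) - sigma * sigma / 2;
    }

    // The delay a stub would wait before answering one request.
    double nextDelayMillis() {
        return Math.exp(mu + sigma * random.nextGaussian());
    }

    public static void main(String[] args) throws InterruptedException {
        // Downstream SLA assumed for illustration: 200 ms mean, 50 ms standard deviation.
        StubLatency latency = new StubLatency(200, 50, 1);
        double delay = latency.nextDelayMillis();
        Thread.sleep(Math.round(delay)); // a stub would do this before writing its response
        System.out.println("responded after about " + Math.round(delay) + " ms");
    }
}
```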

Let's get back to the subject at hand. At the end of the run, you total up your counts by time interval and calculate the mean of all your sample counts. This gives you one sample mean. It will not exactly match the mean you obtained previously. It will differ by some amount, and the question is whether it differs by too much. How much is too much?

The usual way of determining how much is too much is to calculate a range of values referred to as a Confidence Interval. This range marks the upper and lower bounds within which your sample value should fall 99% of the time. We say you have generated a 99% Confidence Interval.

Normally you would use a t-distribution for this kind of thing, but in our case, since we have a sample count of 30, it's simpler to use the Normal Distribution. What if you only have 10 samples? I would not worry about it. A t-distribution with 10 degrees of freedom is close enough to the Normal Distribution. A statistician would complain but I want this article to be approachable for software developers.

So, again, we refer to the z-score formula.
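For reference, this is the standard z-score formula, where x is an observed value, μ is the mean and σ is the standard deviation:

$$z = \frac{x - \mu}{\sigma}$$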

Remember when we calculated the production load? We took 2.326 as the z-score for the 99th percentile. This was a one-tail measure: we wanted 1% of all samples to fall above our threshold value. Now we are interested in a two-tail measure. We want 1% of all values to fall outside a range of values centred on our expected value, which means a sample could fall below the lower bound as well as above the upper bound. Consequently, we pick the z-score for 99.5%. This leaves 0.5% probability above the upper threshold and, by symmetry, 0.5% below the lower one. The z-score for 99.5% is 2.576. You can pull these z-scores from any online table for the Normal Distribution.
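Instead of an online table, Python's standard library can produce these values: `statistics.NormalDist().inv_cdf(p)` returns the z-score below which a fraction p of a standard normal distribution lies. This sketch contrasts the one-tail value used for the production load with the two-tail value used here.

```python
from statistics import NormalDist

standard_normal = NormalDist()  # mean 0, standard deviation 1

# One-tail: 1% of values above the threshold.
z_one_tail_99 = standard_normal.inv_cdf(0.99)

# Two-tail: 0.5% above the upper bound and 0.5% below the lower bound.
z_two_tail_99 = standard_normal.inv_cdf(1 - 0.01 / 2)

print(f"one-tail 99%: {z_one_tail_99:.3f}")  # one-tail 99%: 2.326
print(f"two-tail 99%: {z_two_tail_99:.3f}")  # two-tail 99%: 2.576
```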

Here is our inverse formula again.
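Rearranging the z-score formula for x, and applying the z-score with both signs, gives the lower and upper bounds of the range:

$$x = \mu + z\sigma, \qquad \mu - z\sigma \le x \le \mu + z\sigma$$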

Let's say our previous mean value was 12 and its standard deviation was 3.

You can then check if your test sample mean falls within your 99% confidence range.
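Here is a sketch of that check, putting the example numbers through the inverse formula and assuming, as the text does, that the Normal Distribution is good enough. One caveat worth flagging: if σ = 3 is the standard deviation of the individual interval counts rather than of the mean itself, the spread of a mean over n intervals is the standard error σ/√n, which gives a much narrower range. The optional `n` parameter is an addition of this sketch, not part of the method described above, and the test mean of 14 is a made-up value.

```python
import math
from statistics import NormalDist


def confidence_range(expected_mean, sd, confidence=0.99, n=1):
    """Two-tailed range around expected_mean for the given confidence level.

    With n=1, sd is used as-is (x = mu +/- z * sigma).
    With n>1, sd is treated as the per-interval standard deviation and
    converted to the standard error of a mean of n samples.
    """
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    half_width = z * sd / math.sqrt(n)
    return expected_mean - half_width, expected_mean + half_width


def within_range(test_mean, bounds):
    low, high = bounds
    return low <= test_mean <= high


# Previous mean 12, standard deviation 3, as in the text.
bounds = confidence_range(12, 3)           # roughly (4.27, 19.73)
bounds_se = confidence_range(12, 3, n=30)  # roughly (10.59, 13.41)
print(within_range(14, bounds), within_range(14, bounds_se))  # True False
```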

You would repeat this process for each call type your test script makes on your application. When you find that the test mean for a call type falls outside the range, you would adjust the test script by some amount; half the difference between the expected mean and your test mean is about right. After doing all of this, you could run the test again and double check. You should now be very confident that your user model is representative.
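A sketch of that per-call-type loop, assuming each call type in your script is driven by a configurable target count per interval measured in the same units as the means. The dictionaries, call-type names and figures are hypothetical; the half-difference step is the rule described above.

```python
from statistics import NormalDist


def adjust_targets(targets, expected, observed, sd, confidence=0.99):
    """Return new per-call-type targets for the test script.

    targets:  current target count per interval configured in the script
    expected: mean count per interval seen in production
    observed: mean count per interval produced by the last test run
    sd:       standard deviation used for the confidence range
    """
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    new_targets = dict(targets)
    for call_type, target in targets.items():
        difference = expected[call_type] - observed[call_type]
        if abs(difference) > z * sd[call_type]:
            # Outside the range: move by half the difference towards production.
            new_targets[call_type] = target + difference / 2
    return new_targets


new_targets = adjust_targets(
    targets={"login": 10, "search": 40},
    expected={"login": 12, "search": 40},
    observed={"login": 2, "search": 41},
    sd={"login": 3, "search": 3},
)
# login is 10 below production (outside +/- 7.73), so its target rises by 5;
# search is 1 above production (inside the range), so it is left alone.
print(new_targets)  # {'login': 15.0, 'search': 40}
```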

Again we could ask: why pick a confidence level of 99%? This is a fair question. You could take 90% or 95% as your threshold. The lower the confidence level you take, the narrower the range, and the higher the risk that you keep adjusting your test and get nowhere. Remember that this is stochastic data. It has a natural variance, and at some point you can't zero in any closer to the accurate figure.
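To make the trade-off concrete, this sketch computes the half-width of the range (z × σ) at a few confidence levels, reusing the mean of 12 and σ = 3 from the earlier example. The narrower the range, the more often a test run will land outside it purely through natural variance.

```python
from statistics import NormalDist

sigma = 3  # standard deviation from the earlier example

half_widths = {}
for confidence in (0.90, 0.95, 0.99):
    # Two-tailed z-score for this confidence level.
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    half_widths[confidence] = z * sigma
    print(f"{confidence:.0%}: z = {z:.3f}, range = 12 +/- {z * sigma:.2f}")
```

This prints ranges of roughly 12 ± 4.93 at 90%, 12 ± 5.88 at 95% and 12 ± 7.73 at 99%.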

Setting the Failure Condition

On to the next task. We are getting close now; the pay-off is nigh. You are now confident that you can represent a user in your performance testing environment, and you know what your production load is. Now you are chiefly interested in how much load your application can support before it fails.

The problem now is deciding what the failure condition is.

It should be a statement that goes: 99% of the time we expect the call to complete within 200ms.

Again, talking about averages is less useful here. If the threshold were the average response time, we would expect roughly half our samples to exceed it. And, again, taking an arbitrary extreme value, such as the single slowest response, is meaningless too.
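The Hound reports calculate these percentiles for you, but as a sketch of what the failure condition actually checks, here is a nearest-rank 99th percentile compared with the 200 ms threshold from the example statement. Nearest-rank is only one of several percentile definitions; reporting tools may interpolate between samples and give slightly different values. The function names and the sample run are hypothetical.

```python
import math


def percentile(samples, pct):
    """Nearest-rank percentile: the smallest sample such that at least
    pct% of all samples are less than or equal to it."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def meets_condition(response_times_ms, pct=99, threshold_ms=200):
    """True if pct% of calls completed within threshold_ms."""
    return percentile(response_times_ms, pct) <= threshold_ms


# Hypothetical run: 98 fast calls and two slow ones out of 100.
run = [50] * 98 + [300, 400]
print(percentile(run, 99), meets_condition(run))  # 300 False
```

With only one slow call in the hundred, the 99th percentile would be 50 ms and the run would pass.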

This is where the Hound reports help a great deal. For any test run, Hound will tell you the response times included in the test and will calculate these percentiles for you.

Testing

Now for the hard part. This is the intensely boring part that takes ages. We need to run a number of tests against the application at different load levels.

What I would suggest is a strategy similar to a binary search. Pick a load which you feel will be supported and a load which you feel will not be supported. Run at both levels and confirm that your assumptions about them were right. If they were, run a test at the midpoint between the two load levels and evaluate the new results. If it fails the test condition, the midpoint replaces your upper bound; if it passes, it replaces your lower bound. Repeat. Naturally, if either of your first guesses was wrong, guess again.
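The search can be sketched as follows. `passes` is a hypothetical callable standing in for "run a short test at this load and check the failure condition"; in practice each call is a real test run. Loads are assumed to be whole numbers (for example, concurrent users), and the tolerance is the gap at which you stop dividing.

```python
def find_capacity(passes, low, high, tolerance):
    """Narrow down the highest load that still meets the failure condition.

    passes:    hypothetical callable: runs a test at a load, True if it passed
    low, high: initial guesses for a passing and a failing load (integers)
    tolerance: stop once the gap between passing and failing loads is this small
    """
    if tolerance < 1:
        raise ValueError("tolerance must be at least 1 for integer loads")
    if not passes(low):
        raise ValueError("the lower guess failed; guess a lower load")
    if passes(high):
        raise ValueError("the upper guess passed; guess a higher load")
    while high - low > tolerance:
        mid = (low + high) // 2
        if passes(mid):
            low = mid   # a passing midpoint replaces the lower bound
        else:
            high = mid  # a failing midpoint replaces the upper bound
    return low, high


# Simulated system that copes with up to 437 users (made-up figure).
print(find_capacity(lambda users: users <= 437, 100, 1000, 10))  # (437, 444)
```

Starting from a gap of 900 users, this needs only six test runs in total to get within 10 users of the limit.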

Each test run need not last very long. You are not doing saturation testing here.

Conclusion

In short order, you should arrive at a load level that passes and a load level that fails, and the gap between them will be small enough that you are not interested in dividing it any further. The passing load level is your system capacity. It is then a straightforward matter to subtract your current production load to arrive at how much head room you have.

Going further, you could calculate from your ELK data the rate at which your production load is changing. This will give you an idea of how much time you have until your head room is exhausted.
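As a sketch of that calculation, assume you have exported periodic peak production loads from ELK and that growth is roughly linear. The function names, the monthly figures and the capacity of 200 are hypothetical. The growth rate here is a least-squares slope, and the time left is simply head room divided by that rate.

```python
import math


def slope(xs, ys):
    """Least-squares slope of ys against xs (load growth per time unit)."""
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    return sxy / sxx


def time_until_exhausted(capacity, times, production_loads):
    """Head room and time left, assuming the load keeps growing linearly."""
    head_room = capacity - production_loads[-1]
    growth = slope(times, production_loads)
    if growth <= 0:
        return head_room, math.inf  # load is flat or falling
    return head_room, head_room / growth


# Hypothetical monthly peak loads and a measured capacity of 200.
head_room, months_left = time_until_exhausted(200, [0, 1, 2, 3], [100, 110, 120, 130])
print(head_room, months_left)  # 70 7.0
```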

I wish you lots of head room and little time to increase it. Thanks for reading.